Facebook and Instagram starting to identify and label 'fake news'

Oct 28, 2019

Unfortunately, with more than two billion active users, Facebook and the rest of the social media landscape make it extremely easy for fake news to spread like wildfire.

With the 2020 Presidential election quickly approaching, Facebook is starting to implement new strategies to identify fake news — and to help users identify it as well.

On the heels of this initiative, the company recently announced the introduction of Facebook News.

After Mark Zuckerberg’s speech on free expression at Georgetown University, Facebook announced its tactics for proactively detecting and labeling any information deemed false.

How Facebook is trying to reduce the spread of misinformation

Facebook is working to ensure misinformation doesn’t spread, and that people are able to know what’s real by identifying which content is fake.

While Facebook currently uses a third party as well as machine learning to detect and fact-check fake news, the misinformation label isn’t prominently displayed on questionable posts or articles.

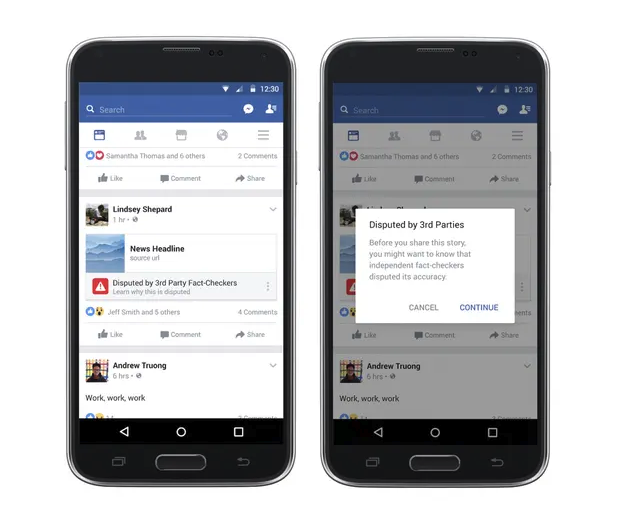

When Facebook initially released its fake news initiative in 2017, it backfired. The company found that people were more likely to click and share the articles marked as fake. While the content is tagged, it’s definitely something that can be overlooked by users; check out the image below.

Image Source: The Guardian

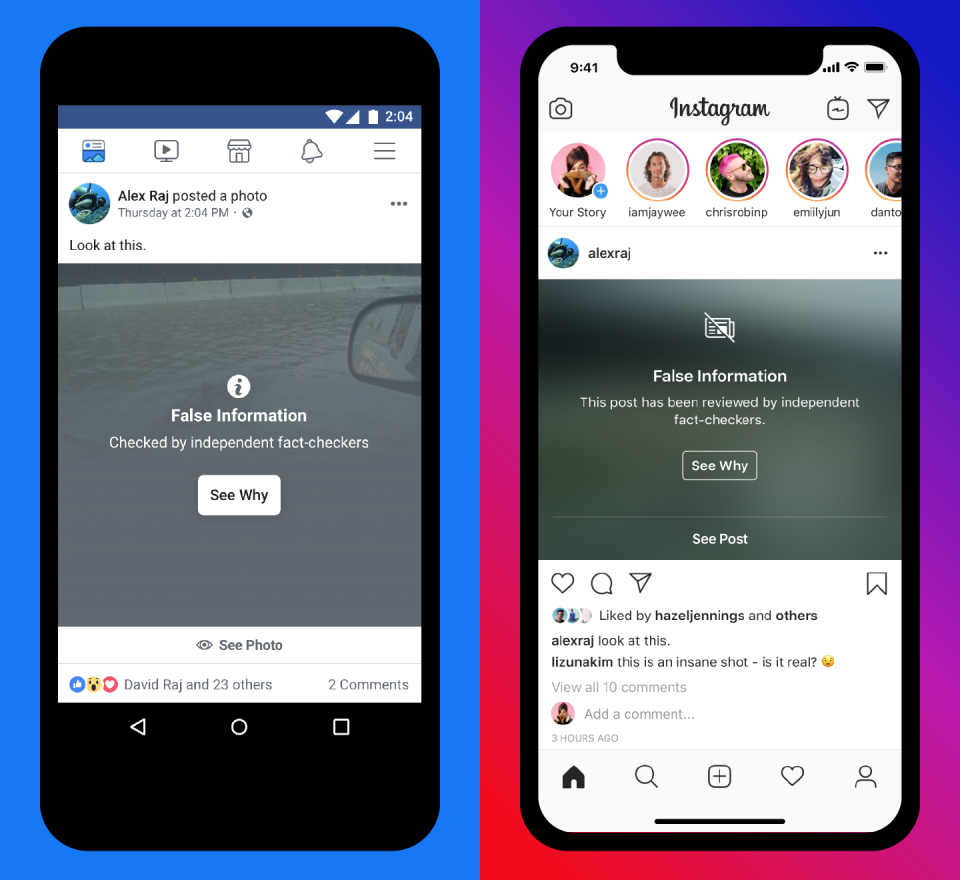

In the next month, you will start to see Facebook implement new labels that will make fact-checking more transparent — ensuring people clearly know when something has been fact-checked. If that content is marked false, labels will be shown on top of the false photos or videos, with a link to the fact-checker assessment.

Image Source: Facebook

By implementing these labels, Facebook is allowing users to better decide for themselves what they want to read and share.

These labels are not only being added to posts, but also to Stories.

According to Facebook’s VP of Integrity Guy Rosen, Facebook will work to reduce distribution of content by placing advertising restrictions on Pages and users that continually post misinformation.

This isn’t something that’s only being implemented on Facebook. Instagram is also working to reduce and label any misinformation shared by its users. Similarly, when it comes to accounts that are repeatedly posting misinformation, Instagram will filter content from those accounts from appearing in its Explore and hashtag pages.

While using a third-party fact-checker is effective — and allows the platform to be proactive — there is a limit to using software and machine learning to detect fake news and fact check.

So, what else is Facebook doing?

The introduction of Facebook News

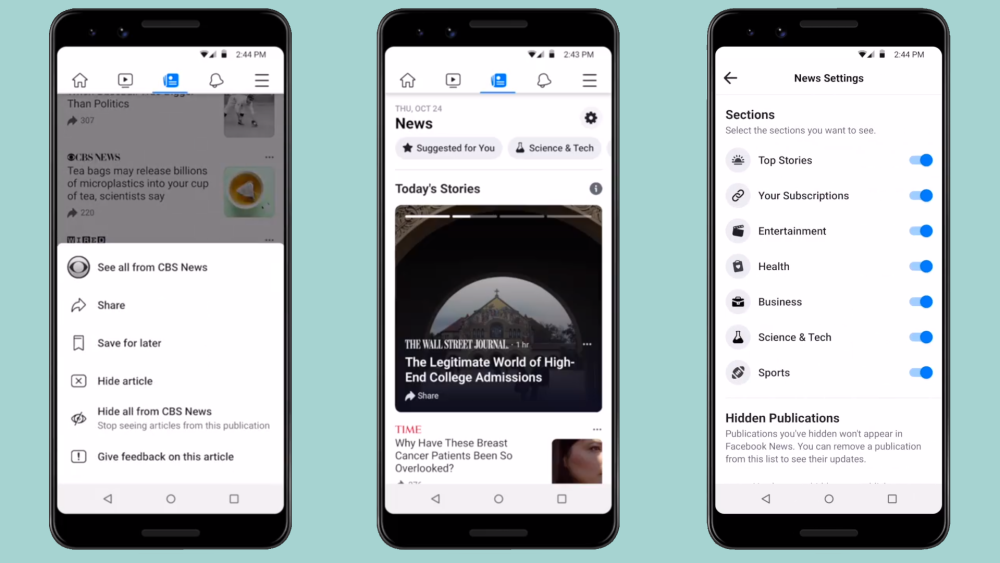

Facebook announced the release of its “News” tab, which will soon be available on its U.S. user interface.

Introducing this tab is a way for the company to partner with over 200 credible news organizations to bring in the most relevant stories.

This new tab is also a way for Facebook to monitor and ensure that the publishers and the content being shared follow a set of integrity standards. Content violating those standards would include clickbait, hate speech, spam, and misrepresentation, to name a few.

Image Source: Facebook

Helping people spot misinformation

Aside from using third-party software to detect and fact-check news, the biggest thing Facebook can do is help people identify misinformation themselves.

That is why Facebook will be partnering with various organizations and media literacy experts to give its users the tools to make informed judgments.

Facebook is hoping that people will not only be able to spot fake news on the platform, but also on other social networks and websites.

In the past week, Facebook Newsroom announced that the company is initially going to invest $2 million in projects that support people spotting misinformation.

Some of the projects they’re going to invest in include:

- Training programs for large Facebook and Instagram accounts to help them reduce the distribution of false news

- Launching a program for first-time voters on critical thinking

- Expanding a pilot program for senior citizens and high schoolers to educate them on media literacy and online safety

- Events at libraries, bookstores, and community centers across the country

- Digital and media literacy lessons for middle and high school students, with the help of the Berkman Klein Center for Internet and Society at Harvard University

While all of those tactics are great initiatives for identifying fake news, there’s still the possibility that fake news can make its way across social media. When it comes to your brand, misinformation can also lead to mistrust between companies and users.

So, what does this mean for marketers?

Fake news isn’t just something that can affect politicians. There could be serious consequences for your brand’s image, especially when a staggering 45% of Americans get their news from social media.

Do you remember the bogus Starbucks campaign from 2017?

Someone from the anonymous online message board 4chan posted tweets advertising “Dreamer Day” in an attempt to persuade undocumented immigrants to visit the coffee chain for free drinks. The social media ads included the company’s logo, graphics, and signature font.

The company had to work quickly to counter and remove the seemingly legitimate advertisements.

This isn’t something that has only happened to Starbucks. Misinformation has also been distributed about Coca-Cola and Dasani bottled water being infested by parasites, an Xbox console killing a teenager, and Costco ending its membership program.

Not all fake news is created only to harm companies. It can also be used to seek financial gain, as well as to discredit a company or small business.

Although all of those examples involve billion-dollar organizations, it’s something that could happen to any company, even with something as small as a fake negative review or post.

As a marketer, it’s important to ensure your company is able to combat and mitigate such threats through social media, the press, and even a legal team (when necessary). Similar to your company having a cybersecurity or security breach protocol, there should be something in place for tackling malicious threats that occur on social media.

It’s also important to know what is being said about your company — from customers, employees, and your audience on social media. If you’re using HubSpot, one thing you could do is set up streams to monitor social posts that mention your company name.
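If you don’t have a monitoring tool in place, the basic idea behind a mention stream can be sketched in a few lines of Python. This is only an illustration, not how HubSpot or any other platform works under the hood: the `find_brand_mentions` function, the post format, and the "Acme Coffee" brand are all hypothetical, and in practice the posts would come from whatever export or feed your social tools provide.

```python
import re
from typing import Dict, Iterable, List


def find_brand_mentions(posts: Iterable[Dict[str, str]], brand_terms: List[str]) -> List[Dict[str, str]]:
    """Return the posts whose text mentions any brand term.

    Matching is case-insensitive and only counts whole words or phrases,
    so "Acme" does not match "Acmecoffee". The post format (a dict with
    "author" and "text" keys) is an assumption made for this sketch.
    """
    if not brand_terms:
        return []
    pattern = re.compile(
        r"(?<!\w)(?:" + "|".join(re.escape(term) for term in brand_terms) + r")(?!\w)",
        re.IGNORECASE,
    )
    return [post for post in posts if pattern.search(post.get("text", ""))]


# Hypothetical sample data standing in for a real social feed.
sample_posts = [
    {"author": "@coffee_fan", "text": "Is Acme Coffee really giving away free drinks today?"},
    {"author": "@local_news", "text": "Road closures downtown this weekend."},
    {"author": "@skeptic", "text": "That ACME COFFEE coupon looks fake to me."},
]

mentions = find_brand_mentions(sample_posts, ["Acme Coffee"])
for post in mentions:
    print(post["author"], "->", post["text"])
```

A simple keyword match like this will surface both genuine customer questions and possible hoaxes, so someone on your team still needs to review the results and decide which mentions need a response.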

When mitigating threats to your brand online, be sure to remain professional and transparent. Don’t be afraid to share the issue with your audience. Your followers and customers will appreciate the transparency, and it could potentially generate positive feedback and reviews.

What are your thoughts on Facebook’s improvements for detecting and reducing misinformation? Let us know in IMPACT Elite!