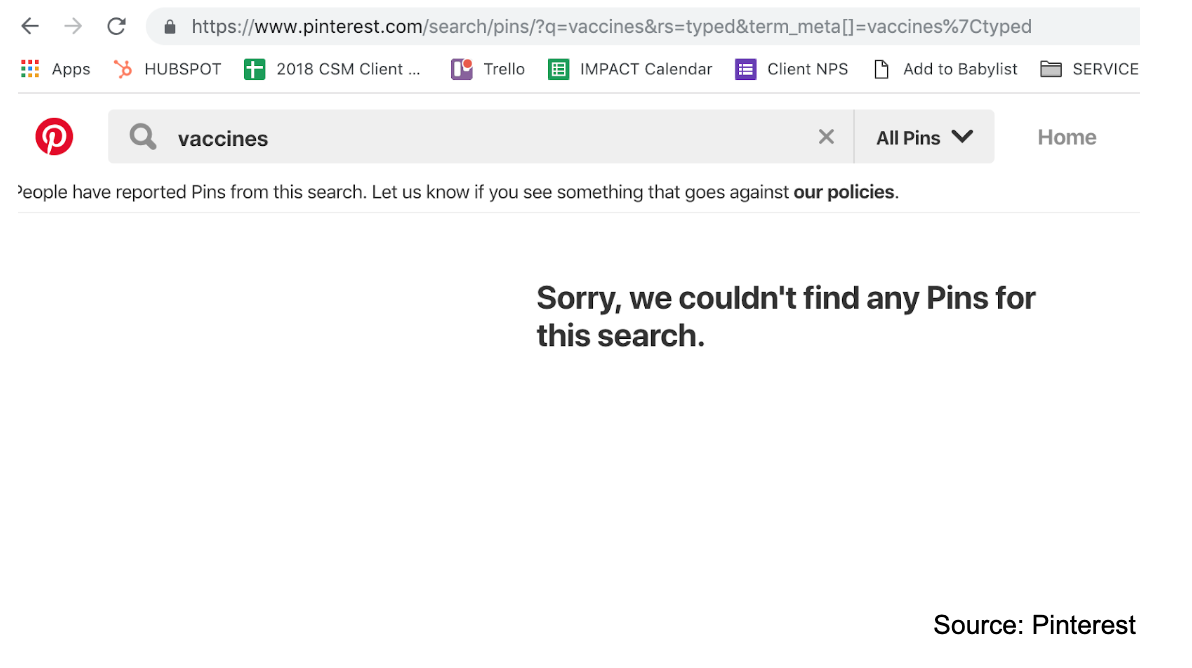

What Pinterest's Regulation of Vaccine Content Hints About the Future of Social Sharing

By Genna Lepore

Feb 25, 2019

As a new mother, I rely heavily on two resources for guidance on parenting.

One is the trusted mothers in my inner circle. The other, while a bit dodgy, is the internet.

I’ve never been a “search for an ailment, trust WebMD” kind of gal, but when it came time to pick a pediatrician last year, I did do research weighing the pros and cons of vaccinations via the web.

I don’t recall my exact search criteria, but I do remember being bombarded by a slew of sites that were staunchly against vaccinations. While the validity and authorship of the articles may have been questionable, the sheer volume of results made me second-guess whether or not to vaccinate my son.

With that in mind, upon hearing about Pinterest’s new approach to stopping false information from being searched on its site, I was stunned and, initially, impressed.

This week, Pinterest told the Wall Street Journal that in late 2018 it quietly began blocking anti-vaccination search terms in an effort to limit the spread of misleading content.

Meaning, users can still pin content, but the company is actively preventing users from finding it.

Now, it’s important to state here that this doesn’t appear to be a political or social move, but rather one made in an effort to combat the spread of false information. Considering how much hot water the spread of false information has gotten other social platforms into (e.g., Facebook), this is a move worth talking about.

Should online platforms take responsibility for the content being posted on their networks?

Facebook and Google claim that they are making strides to reduce anti-vaccination fodder (and other false information), but Pinterest is aggressively taking a stance to abolish it from their platform.

Pinterest’s dramatic stance brings to light a growing trend among big-name platforms that have vowed to eradicate searches for false content.

Take YouTube for instance.

The video platform giant has pledged to recommend fewer pieces of content that may direct users to conspiracy theories or harmful results.

While the company doesn’t clearly define the subjects that could be tied to harmful misinformation, some examples where it is taking extra precautions are false claims about 9/11 and supposed cures for serious illnesses.

But is this monitoring, blocking, and screening done by social media platforms really necessary?

I do not envy social platforms trying to solve the political and ethical challenges associated with content regulation, but it is the responsibility of these platforms to create an environment conducive to both businesses and users.

The financial viability of online platforms, and a great deal more, depends on it. But is it really their place?

Living in the United States, I’d like to believe that freedom of expression, as a human right, gives me the right to peruse and produce whatever content I see fit.

Shouldn’t the responsibility for content consumption lie with the consumer?

On the other hand, as a mother with a one-year-old who already navigates an iPad better than I do, I hope online platforms will eventually err on the side of caution when it comes to content.

Some have argued that regulating online platforms is as important as regulating tobacco or alcohol.

Important conversations take place on social platforms every day. People share their lives and gain so much knowledge from what they read and watch there.

Social media is almost like a newspaper. We hold newspapers responsible for monitoring the content they publish, and in many ways social media is taking over that space.

That said, enforcing regulation isn’t easy. It’s so much more than one social platform like Pinterest blocking searches.

It is about a triad of parties (private-sector companies, multiple government agencies, and end users) coming together harmoniously to remove harmful or false content.

While Pinterest has taken action against one case of misinformation, it will be interesting to see if/how this expands to others.

What does this mean for YOU?

If regulation of content across social platforms is inevitable, the trickle-down impact on users and companies is unavoidable.

As online platforms dedicate people, resources, and time to regulating content, the way users and marketers interact on the platforms may change dramatically.

Users and marketers alike will need to be even more dedicated to producing factual, reliable content that isn’t misleading or misinformed.

Platforms will likely soon begin to penalize content that falls outside their regulations.

Until jurisdiction is determined, we must stay aware of the “fake news” that permeates online platforms and take responsibility for not contributing to it.

And speaking as a mother, there isn’t a better example of such a user than a new mom, who needs to take on the onus of digesting information with a keen eye for truth.
