
Google Publishes New Developer Doc on JavaScript SEO Basics

Jul 30, 2019

In mid-July, Martin Splitt and Lizzi Harvey, both from Google, released documentation for developers that covers the basics of JavaScript SEO.

🕵️ Hey, psst, you! Yes, you! @LizziHarvey and I wrote another doc.

This time it's the 📝 #javascript #seo basics guide 📝 - https://t.co/1c6Zir92Zj

🤓 Happy sharing with your dev friends! 👩💻 pic.twitter.com/PKVKWoHxSq

Martin Splitt @ 🇩🇪 #newdevs #frankenjs (@g33konaut), July 18, 2019

This section is part of Google’s Search developer guide. It covers how Google Search processes JavaScript, and the best practices for improving JavaScript web apps for Google Search.

But wait: why is processing JavaScript different from processing HTML and CSS?

JavaScript is a programming language that runs in the browser (client-side) and lets developers build dynamic, interactive web pages. Because the scripts run after the HTML arrives, the actual contents of the page can vary depending on who is looking at it, and a crawler that only reads the initial HTML may miss content the scripts add later.

Even Google admits that it is difficult to process JavaScript, and search engine crawlers are still working out the best ways to do so.

And with how widespread front-end JavaScript frameworks and Single Page Applications (SPAs) are becoming, this guide update is Google’s attempt to clarify how best to make your site search engine optimized.

How does Googlebot process JavaScript web apps?

I thought we were talking about web pages and not apps...

Well, the line between the two is becoming much more blurred.

You may not think of your website as being a “web app” with things like logging in or user profiles. But many web services and coding frameworks are now bringing functionality like pre-fetching links (so linked pages can load faster) and only reloading content that changes between pages (again — loading pages faster!).

These have typically been in the realm of “web applications” in the past, but they carry a lot of benefits for standard websites as well. So, unless your website is incredibly barebones, it can probably be considered a web app.

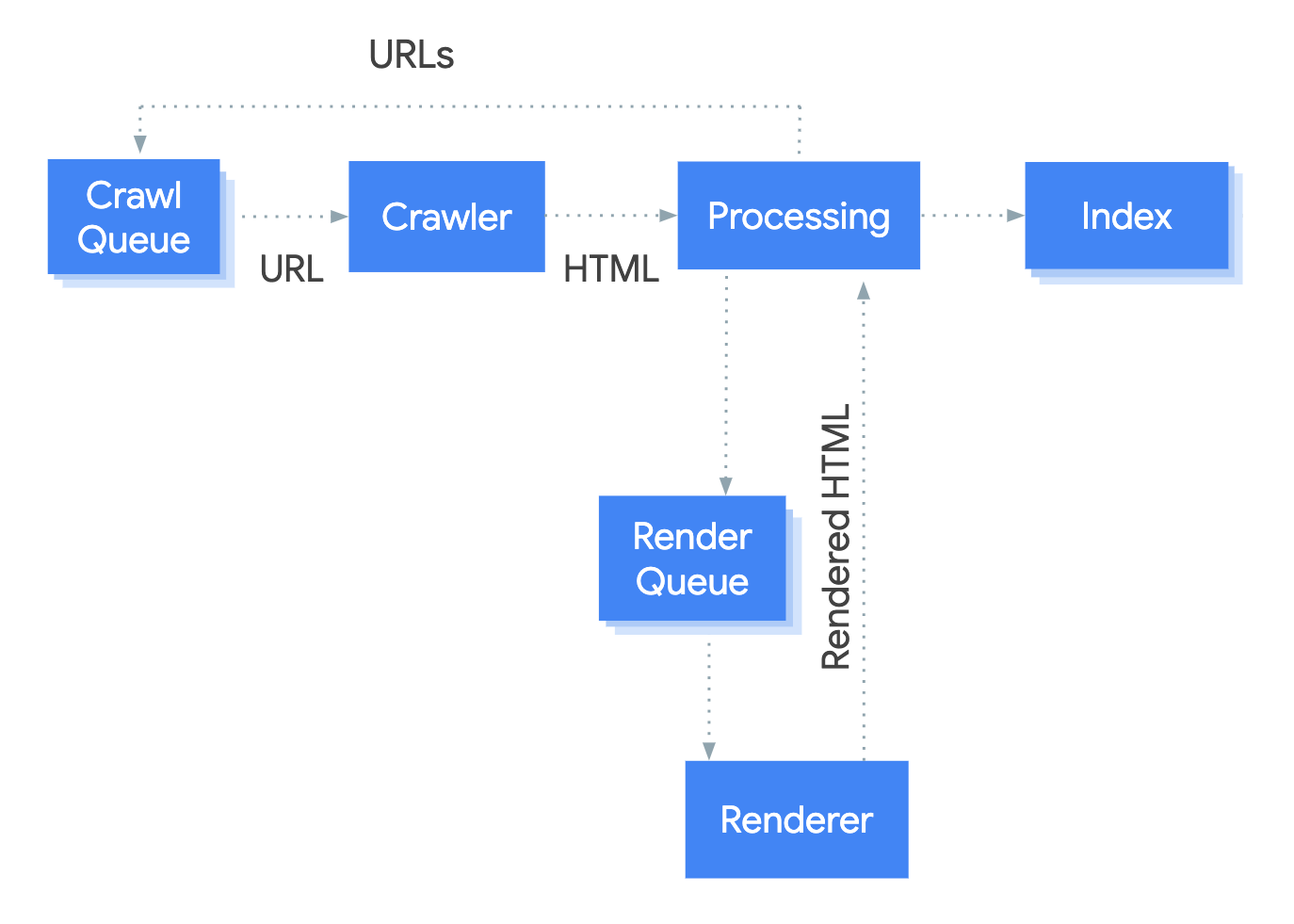

First, Googlebot processes JavaScript web apps in three phases: crawling, rendering, and indexing.

(Source: Google)

- Crawling - Googlebot fetches a URL from the crawling queue and checks if you allow the page to be crawled

- Rendering - Once Googlebot’s resources allow, a headless Chromium (Chrome running without a visible interface, in a server environment) renders the page and executes the JavaScript

- Indexing - Googlebot uses the rendered HTML to index the page for search results
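To make the three phases concrete, here is a toy model in TypeScript. It is only a sketch of the flow described above, not Googlebot's actual implementation: the `FetchedPage` fields and the `processPage` function are hypothetical names invented for illustration. The "noindex" check reflects Google's note, covered in the tips below, that rendering is skipped when the initial robots meta tag contains "noindex."

```typescript
// Toy model of the crawl -> render -> index flow.
// All names here are illustrative assumptions, not Google APIs.

type Phase = "crawl" | "render" | "index";

interface FetchedPage {
  url: string;
  // Assumed flag: does the site allow this URL to be crawled?
  allowedByRobotsTxt: boolean;
  // Content of the robots meta tag in the initial HTML ("" if absent).
  initialRobotsMeta: string;
}

interface Outcome {
  phasesCompleted: Phase[];
  indexed: boolean;
  reason: string;
}

function processPage(page: FetchedPage): Outcome {
  const phasesCompleted: Phase[] = [];

  // Crawling: the URL is fetched only if crawling is allowed.
  if (!page.allowedByRobotsTxt) {
    return { phasesCompleted, indexed: false, reason: "crawling not allowed" };
  }
  phasesCompleted.push("crawl");

  // A "noindex" in the initial HTML stops the process before rendering,
  // so JavaScript never gets a chance to change it.
  const hasNoindex =
    page.initialRobotsMeta.toLowerCase().indexOf("noindex") !== -1;
  if (hasNoindex) {
    return {
      phasesCompleted,
      indexed: false,
      reason: "noindex in initial HTML, rendering skipped",
    };
  }

  // Rendering: JavaScript runs and produces the rendered HTML.
  phasesCompleted.push("render");

  // Indexing: the rendered HTML is what gets indexed.
  phasesCompleted.push("index");
  return { phasesCompleted, indexed: true, reason: "rendered HTML indexed" };
}
```

Calling `processPage` with `allowedByRobotsTxt: true` and an empty `initialRobotsMeta` walks through all three phases, while an initial value of "noindex" stops after crawling.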

Next, the guide suggests some simple, basic tips to improve your site’s SEO — with links to more detailed resources:

- Describe your page with unique titles and snippets. JavaScript can be used to set or change the meta description as well as the title.

- Write compatible code. Googlebot has some limitations regarding which APIs and JavaScript features it supports, so it is important to make sure your code is compatible.

- Use meaningful HTTP status codes. Use a meaningful status to tell Googlebot if a page should not be crawled or indexed, as well as if a page has moved to a new URL.

- Use meta robots tags carefully. Use them if you wish to prevent Googlebot from indexing a page or following its links. (Note: Google states that using JavaScript to change or remove the robots meta tag might not work as expected. Googlebot skips rendering and JavaScript execution if the robots meta tag initially contains "noindex." If you want to use JavaScript to change the content of the robots meta tag, do not set the meta tag's value to "noindex.")

- Fix images and lazy-loaded content. Images can be costly in bandwidth and performance, so a good solution is lazy-loading: loading images only when the user is about to see them. Instead of loading everything at once, lazy-loading defers non-critical resources at page load and fetches them when they are needed.
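Two of these tips, unique titles and descriptions and meaningful HTTP status codes, can be sketched together. The TypeScript below is a minimal illustration under assumed data: the `PAGES` and `MOVED` tables, the "Acme Bikes" site, and the function names are all made up for the example, and a real app would wire the result into its own server or framework.

```typescript
// Sketch: pick a status code and page metadata for each route.
// Site data and names are hypothetical, for illustration only.

interface PageMeta {
  title: string;
  description: string;
}

interface RouteResponse {
  status: 200 | 301 | 404;
  location?: string; // set only for redirects
  meta?: PageMeta;   // set only for pages that exist
}

// Each page gets its own title and description.
const PAGES: Record<string, PageMeta> = {
  "/": {
    title: "Acme Bikes",
    description: "Hand-built city and touring bikes.",
  },
  "/pricing": {
    title: "Pricing | Acme Bikes",
    description: "Compare prices for every Acme bike model.",
  },
};

// Old URLs that have permanently moved.
const MOVED: Record<string, string> = {
  "/old-pricing": "/pricing",
};

function hasKey(table: object, key: string): boolean {
  return Object.prototype.hasOwnProperty.call(table, key);
}

function resolveRoute(path: string): RouteResponse {
  if (hasKey(MOVED, path)) {
    // 301 tells crawlers the page has moved to a new URL.
    return { status: 301, location: MOVED[path] };
  }
  if (hasKey(PAGES, path)) {
    return { status: 200, meta: PAGES[path] };
  }
  // Unknown routes get a real 404 instead of a 200 "not found" page.
  return { status: 404 };
}

// Returns every title that is used by more than one page.
function duplicateTitles(pages: Record<string, PageMeta>): string[] {
  const seen: Record<string, boolean> = {};
  const duplicates: string[] = [];
  for (const path of Object.keys(pages)) {
    const title = pages[path].title;
    if (seen[title] === true && duplicates.indexOf(title) === -1) {
      duplicates.push(title);
    }
    seen[title] = true;
  }
  return duplicates;
}
```

Here `resolveRoute("/old-pricing")` yields a 301 pointing at `/pricing`, an unknown path yields a 404, and `duplicateTitles(PAGES)` is a quick check that no two pages share a title.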

Like any other programming language, JavaScript has advantages and disadvantages.

It is a powerful tool for developers and can enhance the user experience. Since it runs locally in your browser, it can be a very fast and seamless experience (instead of relying on multiple trips to the server to send and receive information). It is also relatively simple and easy to learn, which makes the barrier to entry (even for non-developers) quite low.

But with those advantages come some disadvantages as well. The main two are security and browser support. Because it executes on the client’s computer, JavaScript can be exploited for malicious purposes. Different browsers also interpret JavaScript differently, so the same code can behave differently from one browser to the next.

One other drawback is that JavaScript is sometimes disabled, which means a lot of functionality may not work on the client’s computer at all.

With that being said, your site is probably using JavaScript in some form, so it is important to understand how Googlebot processes it. Making your JavaScript-powered web application discoverable for Google Search will help increase your organic visibility and bring more traffic to your site.

Excited to see what Google decides to add next to expand its JavaScript SEO guidance? Let us know your thoughts in Elite!
